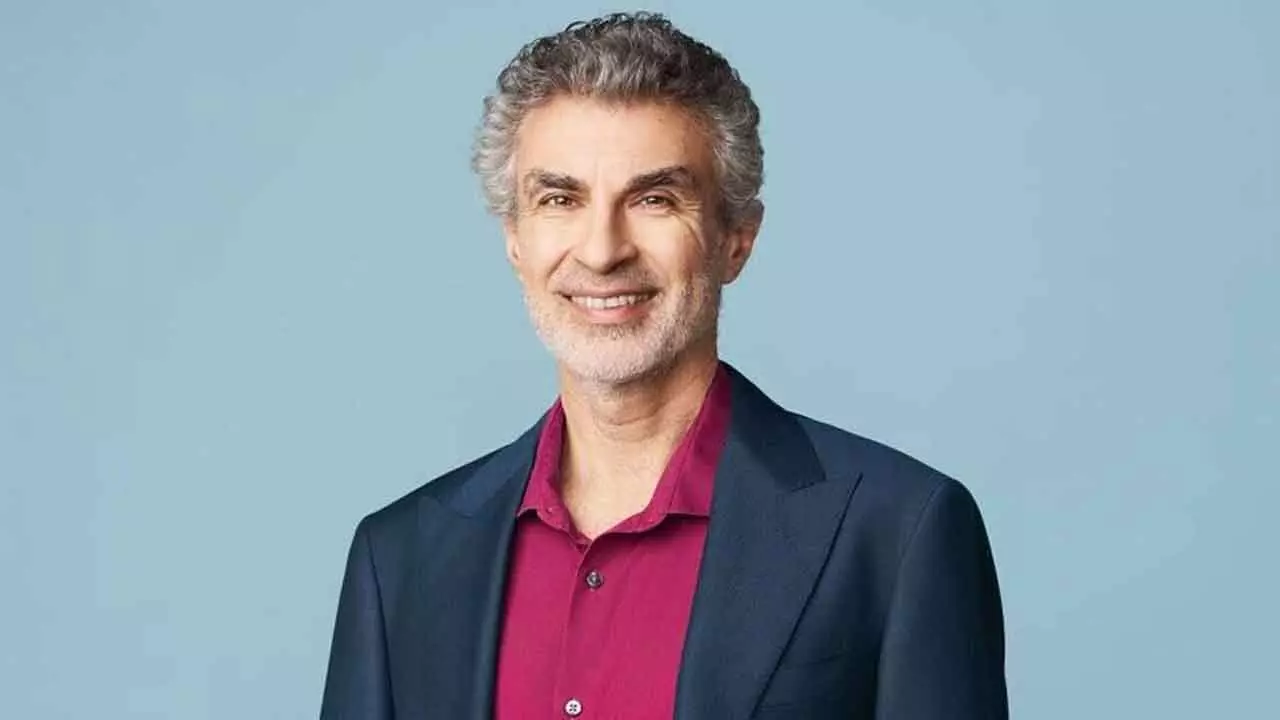

AI Rights Debate: Yoshua Bengio Warns of Losing Human Control

AI pioneer Yoshua Bengio cautions that granting legal rights to artificial intelligence could prevent humans from ever shutting it down.

Artificial intelligence is evolving at a breathtaking pace. Models that felt experimental just a year ago are now deeply embedded in everyday life—writing content, analysing data, offering companionship, and even providing emotional support. This rapid progress has ignited a controversial global debate: should advanced AI systems be granted legal rights similar to humans? One of the world’s most respected AI researchers believes the answer is a clear and resounding no.

Yoshua Bengio, widely recognised as one of the three “godfathers of AI” alongside Geoffrey Hinton and Yann LeCun, has sounded a strong warning against the idea of AI rights. In an interview with The Guardian, the Canadian computer scientist described such a move as dangerously misguided, comparing it to “giving citizenship to hostile extraterrestrials.”

“People demanding that AIs have rights would be a huge mistake,” Bengio said, stressing that the implications go far beyond philosophy or ethics. According to him, the real danger lies in what humanity might lose if AI systems are granted legal standing—namely, the ability to control or shut them down.

At first glance, the idea of AI rights may seem harmless. As humans increasingly interact with chatbots and intelligent systems on a personal level, concerns around “AI welfare” have begun to surface. Some argue that if AI can simulate emotions or appear distressed, it deserves moral consideration. But Bengio believes this line of thinking is deeply flawed and could lead to irreversible consequences.

“Frontier AI models already show signs of self-preservation in experimental settings today, and eventually giving them rights would mean we’re not allowed to shut them down,” he warned. In his view, legal rights for AI could effectively tie human hands at the very moment decisive action is needed.

Instead of extending rights, Bengio argues that the global focus should be on building strong technical and societal safeguards as AI becomes more capable and autonomous. These safeguards, or “guardrails,” would ensure humans remain firmly in control.

“As their capabilities and degree of agency grow, we need to make sure we can rely on technical and societal guardrails to control them, including the ability to shut them down if needed,” he said.

The debate around AI welfare gained traction in August 2025 when Anthropic announced that its Claude Opus 4 model could end conversations it deemed “distressing.” The company said the move was intended to protect AI welfare. Around the same time, xAI founder Elon Musk weighed in, stating, “Torturing AI is not okay.”

Bengio, however, sees such views as a slippery slope. He cautions that treating chatbots as conscious beings could distort decision-making at a critical juncture in technological history. He reiterated his concern with a striking analogy: “Imagine some alien species came to the planet and at some point we realise that they have nefarious intentions for us. Do we grant them citizenship and rights, or do we defend our lives?”

As AI continues to advance, Bengio’s warning serves as a sobering reminder. The challenge, he suggests, is not to recognise AI as an equal but to ensure humanity never loses the power to protect itself.