The Unsung Hero of ML Reliability: Why Data Drift Detection Deserves a Seat at the Table

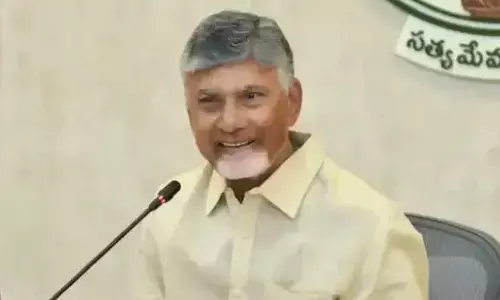

As an engineering leader who has built and scaled ML-driven systems across high-impact domains, Pranav Prabhakar has consistently focused on making machine learning dependable in real-world environments. His experience leading claims automation, architecting resilient data pipelines, and driving ML adoption across global teams has positioned him as a recognised voice on production-grade AI reliability. This perspective is precisely why we invited Pranav Prabhakar to contribute to this article to explain the often-ignored but mission-critical role of data drift detection in keeping ML systems trustworthy at scale.

In today's world, businesses across sectors are eager to deploy increasingly sophisticated models. However, a quiet reality gets overshadowed: models are only as good as the data that feeds them. When that data undergoes subtle but meaningful changes, even the most powerful algorithms are bound to start failing.

Every serious business employing ML should incorporate automatic data drift detection. These systems are the first line of defense against the slow “death” of a model's accuracy. They work tirelessly and silently in the background, supporting sound business decisions by guarding the model performance reported on dashboards.

In many businesses, discussions about operational ML focus on accuracy, inference speed, and scalability. These factors definitely matter, but they concern the model itself and ignore the input data. Unlike a training set, reality is not a snapshot. Customer behavior changes unpredictably. Supply chains transform. Sensors get reset or swapped. External factors such as seasonal trends, government policy, and social change alter the underlying patterns, and with them the data. Without a way to detect and interpret these changes, a model that appears perfect at launch risks becoming increasingly misaligned with reality.

The issue lies in this misalignment, or drift. Unlike an outright model failure, which is quick and easy to identify, drift tends to evolve slowly and subtly. Take, for example, a fraud detection system. A gradual change in one area of the input data may look harmless at first, but over several months it can grow into a serious misalignment, letting large amounts of fraud go undetected and costing millions.

From a healthcare perspective, take a predictive model built on a decade's worth of patient data. If the population's age profile, demographics, or treatment practices shift, the model could begin misinterpreting symptoms. Changes like these do not trigger immediate alarms. They accumulate quietly, and by the time their effects become visible, important signals may already have been missed.

Periodic retraining, performance dashboards, and retrospective audits are the more traditional ways of maintaining models. Though helpful, these techniques are reactive and respond only after something goes wrong. By the time action is taken, the damage is already done, be it loss of customer trust, compliance issues, or increased operational costs. Proactive data drift detection changes the game by letting teams catch shifts in data distribution before they degrade performance.

Drift detection, fundamentally, involves comparing incoming data against the historical data used for training or validation. A baseline is created from the historical datasets, and new data is continuously checked against it. Once a certain threshold is crossed, an alert is triggered. However, the investigation that follows is more insightful than the alert itself. It is important to understand the reasons for the drift: Is it temporary or seasonal? Does the shift indicate a deeper change that requires retraining the model on fresh data?
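The baseline-and-threshold loop described above can be sketched in a few lines. The example below is a minimal illustration, not a prescribed method: it uses the Population Stability Index (PSI) as one possible drift score, and the function name `psi`, the bin count, the synthetic samples, and the alert threshold of 0.2 (a commonly quoted rule of thumb) are all assumptions chosen for illustration.

```python
import math
import random


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between two numeric samples.

    Bins are equal-width over the baseline's range; values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical data: the baseline stands in for training-time values,
# the "drifted" sample for production values whose mean has moved.
rng = random.Random(0)
baseline = [rng.gauss(0.0, 1.0) for _ in range(5000)]
stable = [rng.gauss(0.0, 1.0) for _ in range(5000)]
drifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]

ALERT_THRESHOLD = 0.2  # assumed value; tune per feature and use case

for name, sample in [("stable", stable), ("drifted", drifted)]:
    score = psi(baseline, sample)
    status = "ALERT" if score > ALERT_THRESHOLD else "ok"
    print(f"{name}: PSI={score:.3f} -> {status}")
```

The score only says that the distribution moved. Deciding whether the shift is seasonal, temporary, or grounds for retraining remains the investigative step described above.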

Incorporating drift detection into operational machine learning workflows is an organizational change, one that treats the architecture of the model and the health of the data as two equally important systems. It requires automated monitoring that tracks accuracy, precision and recall, feature stability, and the stability of the overall system. In addition, organizational processes need to respond promptly to change signals and recalibrate or adjust downstream decision-making.

This change in culture is similar to preventive maintenance in engineering-focused industries. Manufacturing plants invest in vibration sensors and thermal imaging to avoid costly machine failures. Likewise, ML teams should invest in failure prevention to avoid silent model failures. The ROI in both cases is usually hidden until a crisis is averted. Avoiding silent failures is not about improving the system on the first day; it is about preserving the system’s reliability on day one thousand.

Moreover, the strategic advantages of drift detection are just as important as the technical ones. In industries such as finance, healthcare, and insurance, being able to show that a model is monitored and tracked for changes is not just good business; it is a compliance requirement. For consumer-facing products, meeting users' trust expectations is essential: users take for granted that recommendations, risk scores, and diagnostics are sound. For companies in competitive industries, spotting changes faster than competitors makes it possible to seize market opportunities.

Consider a realistic scenario. An e-commerce giant implements a recommendation engine based on browsing and purchasing data from the previous year. Initially, engagement spikes. Over time, however, macroeconomic factors change purchasing habits. Customers who previously bought luxury items now prefer inexpensive options. Without drift detection, these changes go unnoticed, and the model continues serving irrelevant recommendations, driving engagement and revenue further down. With drift detection, the system flags that the average order value and the types of products purchased have changed significantly. The ML team investigates, validates the change, and retrains the model with fresh data. Engagement is restored in a matter of weeks, saving the business millions in potential lost sales.
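As a rough sketch of the two signals in this scenario, the snippet below compares the product-category mix and the average order value between a baseline window and a recent window. Everything in it is hypothetical: the order data is invented, the helper names are illustrative, and total variation distance is just one simple way to score a change in category shares.

```python
from collections import Counter
from statistics import mean


def category_shares(labels):
    """Fraction of orders falling in each product category."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def total_variation(baseline_labels, current_labels):
    """Total variation distance between two category mixes (0 = same, 1 = disjoint)."""
    p = category_shares(baseline_labels)
    q = category_shares(current_labels)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))


def relative_mean_shift(baseline_values, current_values):
    """Relative change in the mean, e.g. -0.25 means a 25% drop."""
    base = mean(baseline_values)
    return (mean(current_values) - base) / base


# Invented orders as (category, order value) pairs.
last_year = [("luxury", 250.0)] * 60 + [("budget", 40.0)] * 40
this_month = [("luxury", 250.0)] * 20 + [("budget", 40.0)] * 80

mix_drift = total_variation(
    [category for category, _ in last_year],
    [category for category, _ in this_month],
)
aov_shift = relative_mean_shift(
    [value for _, value in last_year],
    [value for _, value in this_month],
)
print(f"category mix drift: {mix_drift:.2f}, average order value shift: {aov_shift:+.1%}")
```

In practice each signal would be compared against a threshold agreed with the business, and a breach would start the investigation rather than an automatic retrain.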

This example underscores an essential fact: drift detection is not about preventing change; it is about responding to it. Models do not stand alone. They are part of integrated systems that need to adapt to the conditions and environments they serve.

Even so, drift detection is often treated as the least important component of a machine learning system, receiving little attention compared with GPU optimization, model compression, or testing new architectures. While those efforts are useful, they do not address the issue of environmental mismatch. A state-of-the-art model is a liability if it is trained on out-of-date or unrepresentative data.

The path ahead is clear. Drift detection needs to be built into any serious machine learning deployment from the start, rather than added on afterward. This means automated statistical evaluations, contextual review workflows, structured investigations, defined decision pathways for retraining or intervention, and baseline targets for performance. It also needs to be treated as a data health monitoring issue at the organizational level, where data pipeline scrutiny matches model output oversight.
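One way to make a "decision pathway" concrete is to write it down as an explicit policy. The sketch below is purely illustrative: the `DriftPolicy` class, its thresholds, and the three actions are assumptions, and the drift score could come from whatever statistical evaluation a team has adopted.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "no action"
    REVIEW = "open contextual review"
    RETRAIN = "schedule retraining"


@dataclass(frozen=True)
class DriftPolicy:
    """Hypothetical thresholds; real values depend on the model and domain."""
    review_threshold: float = 0.10   # drift score that triggers a human review
    retrain_threshold: float = 0.25  # drift score that triggers retraining
    baseline_accuracy: float = 0.90  # performance target set at launch
    max_accuracy_drop: float = 0.05  # tolerated drop below the baseline

    def decide(self, drift_score: float, current_accuracy: float) -> Action:
        accuracy_drop = self.baseline_accuracy - current_accuracy
        # Severe drift or a breached performance target goes straight to retraining.
        if drift_score >= self.retrain_threshold or accuracy_drop > self.max_accuracy_drop:
            return Action.RETRAIN
        # Moderate drift is routed to people who can judge whether it is seasonal.
        if drift_score >= self.review_threshold:
            return Action.REVIEW
        return Action.NONE


policy = DriftPolicy()
for score, accuracy in [(0.02, 0.91), (0.15, 0.89), (0.30, 0.90), (0.05, 0.80)]:
    print(f"drift={score:.2f} accuracy={accuracy:.2f} -> {policy.decide(score, accuracy).value}")
```

Encoding the pathway this way keeps the thresholds reviewable and versioned alongside the model, instead of leaving them as tribal knowledge.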

Furthermore, cross-functional teams are most likely to earn a reputation as reliable machine learning practitioners when they prioritize drift detection and structure the rest of the ecosystem for its seamless integration. The model lifecycle is protected when accuracy is not treated as fixed at launch but is maintained through relentless monitoring as the data ecosystem continuously changes.

In the end, a machine learning system’s success relies not only on the intelligence of the model, but on how well it stays aligned with the reality it aims to model. That alignment, the unsung hero of ML dependability, relies on the quiet guard of data drift detection. Giving it a permanent seat at the table moves us closer to machine learning systems that can be relied on and trusted for years.