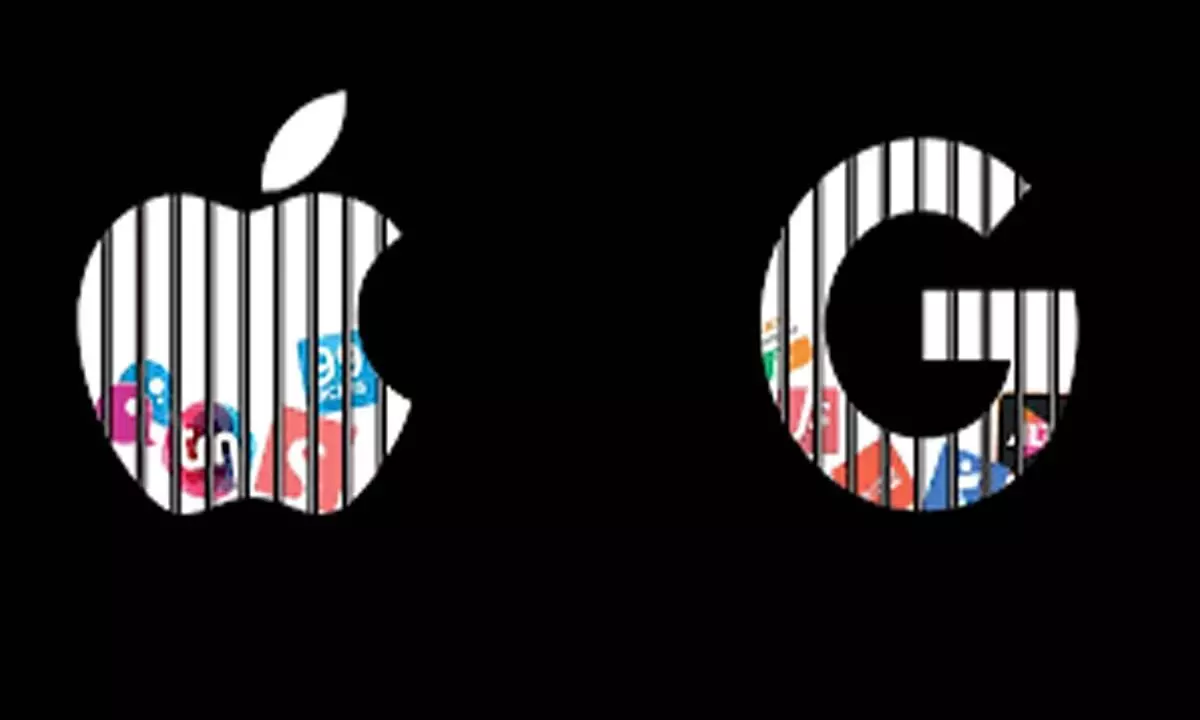

Google, Apple Under Fire As Dozens of AI ‘Nudify’ Apps Slip Through Store Policies

Dozens of AI-powered “nudify” apps bypassed Google and Apple safeguards, raising serious concerns over user safety and platform accountability.

Despite their strict review systems, Google and Apple are facing criticism after reports revealed that dozens of AI-powered “nudify” apps were available on their official app stores, raising fresh concerns about content moderation and user protection.

Both tech giants promote their marketplaces as safe and tightly regulated ecosystems. However, new findings suggest that several apps capable of generating altered or partially undressed images from uploaded photos managed to slip past these safeguards. The issue has sparked debate over how thoroughly such apps are vetted before reaching millions of users.

The controversy follows heightened scrutiny around generative AI tools, including Grok, which recently drew attention for allegedly producing explicit images of real people based on user prompts. Now, similar tools appearing freely on mainstream app stores have intensified worries among experts and watchdog groups.

According to a report by the Tech Transparency Project (TTP), more than 50 apps on Google’s Play Store and 45 on Apple’s App Store were identified as offering AI-driven image manipulation features that digitally remove or alter clothing in photos. Researchers noted that many of these apps were easy to discover through simple keyword searches, with some ranking prominently in store results.

What troubled investigators most was the scale of adoption. Collectively, these apps are said to have amassed around 700 million downloads worldwide. The developers behind them have reportedly generated significant revenue through subscriptions, in-app purchases, and advertisements.

The accessibility of these tools has prompted questions about how platform rules are enforced. Both Google and Apple maintain policies that prohibit apps promoting exploitative or non-consensual content. Yet critics argue that the presence of such apps points to gaps in the screening process.

After receiving the TTP’s findings, both companies reportedly acted against several developers. As cited by CNBC, Google and Apple said they had removed or suspended offending apps and accounts for violating store guidelines. Still, observers say reactive measures may not be enough, and they are calling for stronger pre-approval checks and more proactive monitoring.

The incident highlights the broader challenges posed by AI-based image technologies. As these tools become more sophisticated and easier to deploy, distinguishing legitimate creative applications from harmful uses is becoming increasingly difficult for regulators and platforms alike.

Meanwhile, Google continues to expand its own AI offerings. This week, the company rolled out enhanced AI-powered editing tools in the Google Photos app to more users. Initially introduced with Pixel 10 devices in the US, the feature allows users to modify images using text or voice commands. The updated design, expected alongside Android 16, includes a Gemini-powered editing bar that simplifies automated photo adjustments.

While such innovations demonstrate AI’s potential for creativity and convenience, the recent app store controversy underscores the need for tighter oversight to ensure these technologies are not misused.

As generative AI becomes mainstream, maintaining a balance between innovation and responsibility remains a pressing challenge for the industry.