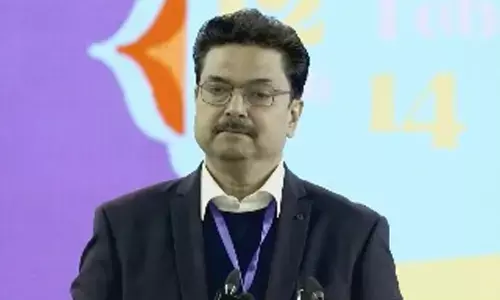

Praveen Kodakandla: Advancing AI, Cloud, and Big Data Through Scalable, Secure, and Ethical Engineering

In a data-driven era where performance, privacy, and cost must coexist, Praveen Kodakandla has built a career on frameworks that scale, endure, and earn trust. With more than two decades of experience, he focuses on production-grade systems that move beyond theory: modernizing data platforms, advancing privacy-centric AI, and enabling real-time decisioning for enterprises in healthcare, retail, and digital services.

Rather than chasing trends, Kodakandla prioritizes durable engineering. His work spans petabyte-scale modernization, real-time analytics pipelines, and AI-powered privacy architectures that detect and manage sensitive data at scale. Across roles, he has led migrations, refactors, and platform upgrades that keep businesses compliant and competitive while improving operational efficiency.

Research That Moves the Needle

AI-Driven Privacy Frameworks: Detecting and Remediating Sensitive Data in Distributed Systems (2025)

Kodakandla’s 2025 research outlines an AI-driven privacy framework designed for distributed environments spanning clouds, edge devices, and hybrid systems. It uses AI models to identify personally identifiable information (PII), protected health information (PHI), financial data, and similar categories, then automates redaction, encryption, and access control at the source. The privacy components are packaged as microservices for real-time processing of data arriving from mobile devices and edge servers.
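The detect-then-redact step described above can be illustrated with a minimal sketch. It is not the framework from the paper: the article does not describe the detection models, so simple regular expressions stand in for the AI classifier here, and the pattern set and the `redact` function are hypothetical names chosen for illustration.

```python
import re

# Hypothetical rule set. These regexes are a stand-in for the AI-based
# detector mentioned in the article, which is not specified in detail.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text):
    """Replace each detected value with a [LABEL] tag.

    Returns the redacted text and a list of (label, original_value)
    findings that a caller could log or send to an access-control step.
    """
    findings = []
    for label, pattern in PATTERNS.items():
        def _mask(match, label=label):
            findings.append((label, match.group(0)))
            return f"[{label}]"
        text = pattern.sub(_mask, text)
    return text, findings


if __name__ == "__main__":
    clean, found = redact("Contact jane@example.com, SSN 123-45-6789")
    print(clean)
    print(found)
```

Running the redaction where the data is produced, rather than in a downstream warehouse, is what "at the source" would mean in a sketch like this: the raw values never leave the service that calls `redact`.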

A standout of this work is its use of federated learning, which lets models evolve independently across nodes without surrendering data ownership, improving anomaly detection while maintaining compliance. Reported results include detection rates of 94-97% and response times under 120 ms, in a design aligned with GDPR, HIPAA, and CCPA. The framework adapts quickly to new data types, situational changes, and policy updates, and is built to integrate with DevOps/MLOps tools for enterprise deployment.
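To make the federated learning idea concrete, here is a minimal sketch of federated averaging (FedAvg), a common aggregation scheme. The article does not say which aggregation method the framework uses, so this is an assumption, and the `federated_average` function is a hypothetical name. Each node trains locally and shares only its model weights and sample count, never its raw data.

```python
def federated_average(client_updates):
    """Combine locally trained weights into one global weight vector.

    client_updates: list of (weights, num_samples) pairs, where weights
    is a list of floats of equal length for every client. Each client's
    contribution is weighted by the number of samples it trained on.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples <= 0:
        raise ValueError("at least one client must report samples")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_samples
    return averaged


if __name__ == "__main__":
    # Two hypothetical nodes: one with 1 sample, one with 3 samples.
    print(federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)]))
```

In this scheme only the weight vectors cross node boundaries, which is how data ownership stays with each node while the shared model still improves.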

Designing Secure and High-Performance Cloud Systems for Proprietary Data Protection (2022)

Amid widespread cloud adoption, this 2022 work presents a design-first approach to security and performance for proprietary data. It examines the evolution of Zero Trust security, AES encryption, load balancing, and scalability, proposing multi-layered defenses, continuous monitoring, and adaptive encryption strategies, while weighing cost, complexity, user impact, ethics, and regulatory requirements (including GDPR and HIPAA). The paper also highlights future directions such as AI in security analysis, quantum-safe cryptography, and edge computing, emphasizing that robust security need not slow systems when built into the architecture from the start.

Cross-Cloud Data Engineering: Unified Big Data Workflows with AWS and GCP (2021)

As organizations increasingly diversify providers, this 2021 contribution details the principles, tools, and patterns for unified big data workflows across AWS and GCP. It addresses data integration, service coordination, security, and observability in cross-cloud settings, framing how teams can optimize cost and performance while meeting compliance obligations. Through a real-world case, it shows how to create scalable, fault-tolerant, and secure pipelines that leverage cloud-agnostic platforms, AI-based orchestration, and responsible cost management.

Building Enterprise Systems That Last

Kodakandla’s research is inseparable from his day-to-day engineering leadership. He has implemented large-scale data protection systems that scan pipelines for exposure risks, established modular ingestion frameworks used across teams, and mentored engineers who now lead major initiatives. His portfolio includes modernization of legacy platforms, real-time analytics, and privacy-aware AI, each informed by production constraints and regulatory rigor.

A recurring theme is efficiency. In “Designing an Incremental Data Ingestion Framework with Apache Spark: Efficiency at Scale,” Kodakandla describes a Spark-based framework that replaces full-batch workflows with incremental loads, cutting compute costs and improving data availability. This approach underpins enterprise pipelines, particularly in retail and finance, where timely ingestion supports customer experience and fraud detection.
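The difference between a full-batch reload and an incremental load can be sketched with a high-watermark pattern. This is an illustration in plain Python rather than the Spark framework from the paper, whose internals the article does not describe; the `updated_at` field and the `incremental_load` function are hypothetical. In Spark, the same idea would typically be a filter on a timestamp column before writing.

```python
from datetime import datetime


def incremental_load(source_rows, watermark):
    """Return only rows changed since the last run, plus the new watermark.

    source_rows: iterable of dicts with an 'updated_at' datetime.
    watermark: the latest 'updated_at' seen by the previous run.
    """
    new_rows = [r for r in source_rows if r["updated_at"] > watermark]
    # If nothing changed, keep the old watermark so the next run
    # starts from the same point.
    new_watermark = max((r["updated_at"] for r in new_rows), default=watermark)
    return new_rows, new_watermark


if __name__ == "__main__":
    rows = [
        {"id": 1, "updated_at": datetime(2024, 1, 1)},
        {"id": 2, "updated_at": datetime(2024, 1, 5)},
        {"id": 3, "updated_at": datetime(2024, 1, 9)},
    ]
    batch, mark = incremental_load(rows, datetime(2024, 1, 3))
    print([r["id"] for r in batch], mark)
```

Because each run processes only the rows past the stored watermark, compute scales with the volume of change rather than the size of the table, which is the cost saving the paragraph above refers to.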

In “Hybrid Data Architecture: Managing Cost and Performance Between On-Premises and Cloud Systems,” he details patterns for balancing low-latency, real-time workloads with economical cloud storage tiers for archival data. These designs draw on his experience migrating more than 800 TB of Hadoop workloads to the cloud while maintaining SLAs and reducing licensing costs.

Kodakandla’s cross-domain thinking is captured in “Unified Analytics Architectures for Cross-Domain Decision Support: A Comparative Study of Insight Frameworks in Healthcare and Retail.” By surfacing commonalities and differences in analytics consumption across sectors, he offers a model that blends domain constraints with adaptable architectural principles, allowing teams to repurpose proven designs in new contexts.

Privacy by Design in AI Workflows

In “Unified Data Governance: Embedding Privacy by Design into AI Model Pipelines,” Kodakandla addresses the challenge of tracking sensitive data across multi-hop AI workflows. He introduces a governance approach for detecting, flagging, and remediating privacy risks in real time, even as data is transformed or embedded. This aligns with his broader focus on AI-augmented privacy, where large language models and sensitive data classification work together to automate remediation without slowing the business.

These ideas also inform his recent enterprise efforts, where he has advanced AI-augmented data privacy frameworks and generative AI techniques for sensitive-data detection. The work has been recognized through internal hackathon accolades, reflecting the practical value of privacy-aware AI in production settings.

Real-Time Intelligence and Modern DataOps

Kodakandla’s engineering philosophy centers on reusability and reliability. He has built real-time analytics pipelines using technologies such as Kafka, Spark Streaming, and BigQuery, enabling responses to operational anomalies, demand shifts, and customer signals in milliseconds. These pipelines are designed to feed AI models for personalization and decision automation, turning data into both operational intelligence and strategic advantage.

To keep systems dependable, he embeds Airflow and cloud-native orchestration for SLA-aware scheduling, automated remediation, and granular lineage. In healthcare settings, he has engineered ETL with access controls, change data capture, and role-based auditing, supporting critical products such as provider compensation models while meeting governance standards.

Leadership, Mentorship, and What’s Next

Beyond systems and papers, Kodakandla is a mentor and strategist. He translates architectural principles into practices that teams can adopt quickly, accelerating delivery while building shared understanding across engineering, analytics, and product.

Looking forward, his priorities include cross-cloud scalability, AI enablement, and data democratization. He envisions AI-native frameworks that embed sensitive-data detection at ingestion, as well as self-healing pipelines that adapt to usage patterns, cost pressures, and policy constraints, so organizations can innovate responsibly at scale.