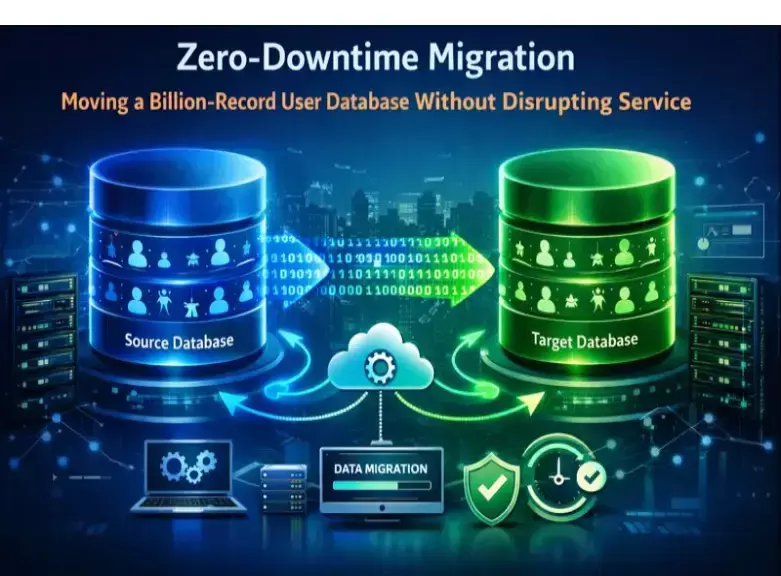

Zero‑Downtime Migration: Moving a Billion‑Record User Database Without Disrupting Service

Learn how zero-downtime migration enables the seamless transfer of a billion-record user database without disrupting service, ensuring reliability, scalability, and data integrity.

By Sumit Saha, Software Engineer at a BigTech

Downtime is costly for any business, especially when users demand speed and constant availability. But what happens when the database behind your application starts to choke on its traffic? We ran into exactly this situation while scaling a system with over a billion users.

In this article, I describe how we migrated a production database with no downtime while keeping users logged in, transactions flowing, and business running as usual. Before getting into how we did it, it is worth asking: why does zero downtime matter so much in modern architecture?

Why Zero-Downtime Matters, More Than Ever

In today’s web application development, particularly in SaaS or consumer apps, downtime translates to lost revenue, broken trust, and SLA violations. When I refer to “zero-downtime,” I mean a migration process where:

- User-facing endpoints remain fully accessible

- No incomplete transactions

- No broken sessions

- No corrupted data

Building systems that support hundreds of thousands of concurrent users makes one thing clear: simple “scheduled maintenance” is a risk you can’t afford. With that goal in mind, let me walk you through the challenge we faced and how we planned to overcome it.

The Problem: Monolithic DB Under Pressure

We were running a monolithic Postgres setup with read replicas, but over time the schema became a bottleneck. A growing number of sessions required writes, and analytic queries and cron jobs ran on top of them, which sent IOPS through the roof. We had two goals:

1. Transition to a more horizontally scalable system, in this case distributed Postgres.

2. Complete the transition with no downtime or performance impact.

The solution required a phased migration strategy, starting with isolating reads and writes to give us control over database access.

Step 1: Introduce a Read-Write Proxy Layer

The very first thing we did was create a proxy interface around our database calls. This is somewhat akin to creating a small-scale ORM with awareness of reading and writing. All write requests were marked and routed to the main database, and reads were handled by the replicas. This allowed us precise control during the initial stages of migration since we could reroute operations progressively.

Having a clean, solid abstraction layer in the code helps tremendously at this point. If queries are unmanaged and scattered across the codebase, this single step can stretch into weeks. Once we had control over read/write traffic, the next step was to keep both systems in sync without risking data integrity.
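To make the read/write routing from Step 1 more concrete, here is a minimal Python sketch. The `ReadWriteRouter` class and its method names are hypothetical, not our production code. It assumes each backend exposes an `execute(sql, params)` method, and it classifies a statement by its first keyword only, so cases such as `SELECT ... FOR UPDATE` or a CTE that writes would need extra handling in a real system.

```python
import random


class ReadWriteRouter:
    """Send writes to the primary and spread reads across replicas."""

    # Assumption: the first keyword is enough to spot a write.
    WRITE_VERBS = {"insert", "update", "delete"}

    def __init__(self, primary, replicas):
        self.primary = primary
        self.replicas = list(replicas)

    def is_write(self, sql):
        words = sql.split()
        return bool(words) and words[0].lower() in self.WRITE_VERBS

    def target_for(self, sql):
        # Writes always go to the primary; reads fall back to it
        # when no replica is configured.
        if self.is_write(sql) or not self.replicas:
            return self.primary
        return random.choice(self.replicas)

    def execute(self, sql, params=()):
        return self.target_for(sql).execute(sql, params)
```

Because every query passes through `target_for`, rerouting a class of operations during the migration becomes a change in one place instead of a hunt through the codebase.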

Step 2: Dual Writes with Safety Nets

We started by implementing dual writes: for some of our higher-traffic models, every write went to both the old and the new database. This approach can be risky, though. What happens if one of the writes fails? In our case, a logging mechanism flagged each failed write and pushed the discrepancy onto a queue, so it could be resolved in the background without holding up the main process.

Developer insight: I made sure every dual-write function had idempotency baked in, so executing the same function multiple times had no negative impact. This made retries safe and the outcome predictable. With dual writes keeping the new system updated in real time, we turned to the heavier lift: migrating the backlog of historical data.
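The dual-write pattern from Step 2 can be sketched roughly as follows. This is an illustration under assumptions, not our actual implementation: `InMemoryStore` stands in for a database, writes are modeled as upserts keyed by record ID (which is what makes them idempotent here), and the repair queue stores only the record ID so that the background repair copies the current value from the old store instead of replaying a possibly stale payload.

```python
import logging
import queue

log = logging.getLogger("dual_write")


class InMemoryStore:
    """Hypothetical stand-in for a database table keyed by record ID."""

    def __init__(self):
        self.rows = {}
        self.available = True

    def upsert(self, record_id, payload):
        if not self.available:
            raise ConnectionError("store unavailable")
        # Writing the same ID and payload twice leaves the same state.
        self.rows[record_id] = payload

    def get(self, record_id):
        return self.rows[record_id]


def dual_write(old_store, new_store, repairs, record_id, payload):
    # The old store remains the source of truth; its errors propagate.
    old_store.upsert(record_id, payload)
    try:
        new_store.upsert(record_id, payload)
    except Exception:
        log.warning("new-store write failed for %s", record_id)
        repairs.put(record_id)  # resolve later, off the request path
        return False
    return True


def drain_repairs(old_store, new_store, repairs):
    """Background pass: copy queued records from the old store again."""
    repaired = 0
    while not repairs.empty():
        record_id = repairs.get()
        try:
            new_store.upsert(record_id, old_store.get(record_id))
        except Exception:
            repairs.put(record_id)  # still failing: keep for next pass
            break
        repaired += 1
    return repaired
```

The request path never waits on the new database being healthy: a failed second write costs one log line and one queue entry, and the repair pass can run as often as needed because each upsert is safe to repeat.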

Step 3: Asynchronous Data Backfill

You can't copy a billion records in one go, at least not without breaking something. Think of the migration as crossing a river on stepping stones: each stone is a 1,000-record chunk, and you mark a stone as “migrated” before stepping to the next. That is the approach we took: a worker queue processed 1,000-record chunks and marked each one “migrated”, which kept database usage as efficient as possible. To avoid hitting the database with too much traffic, we combined Kafka with batch processing.

We prioritized active users during the backfill, so the most valuable records reached the new database first. With the new database warmed up and tested, we began the careful process of shifting live traffic, gradually and safely.
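As a rough illustration of the chunked backfill in Step 3, the sketch below copies records in fixed ID ranges and records each finished range in a `migrated` set so that a restarted worker can skip it. Everything here is a simplifying assumption: plain dictionaries stand in for the two databases, record IDs are integers, and the Kafka-based throttling and active-user prioritization described above are left out.

```python
def backfill(source, target, migrated, chunk_size=1000):
    """Copy source rows into target one ID range at a time.

    `migrated` holds the start ID of every finished chunk, so a
    restarted run resumes where the previous one stopped.
    """
    if not source:
        return 0
    processed = 0
    # Align chunk boundaries so a chunk's key stays stable between runs.
    first = (min(source) // chunk_size) * chunk_size
    for start in range(first, max(source) + 1, chunk_size):
        if start in migrated:
            continue
        for record_id in range(start, start + chunk_size):
            if record_id in source:
                # Do not overwrite rows that dual writes already
                # delivered; those may be newer than this snapshot.
                target.setdefault(record_id, source[record_id])
                processed += 1
        migrated.add(start)  # mark the "stone" only after it is copied
    return processed
```

Marking a chunk only after all of its rows are copied means a crash mid-chunk leads to that chunk being redone, which is harmless because the copy never clobbers existing rows.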

Step 4: Feature Flags for Safe Cutover

Once writes to the new system reached a 95% success rate and reads showed parity, we enabled a feature flag that switched a small portion of traffic to the new database. We managed the flag with LaunchDarkly. As our confidence increased, we extended the rollout to 100%.

If you haven't started using feature flags for infrastructure changes, this is your sign. They turn a cutover from a constant gamble into a methodical process. Switching over is only half of the work, though. The other half is verifying that it worked and being prepared for what might break.
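To show the idea behind the gradual cutover in Step 4 without depending on any vendor SDK, here is a hand-rolled percentage rollout. It is not LaunchDarkly's API, and the names `bucket_for`, `use_new_database`, and the `use-new-db` flag key are made up for illustration. The sketch hashes each user ID into one of 100 stable buckets and enables the new database for buckets below the rollout percentage.

```python
import hashlib


def bucket_for(user_id, flag_key):
    """Map a user to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode()).hexdigest()
    return int(digest[:8], 16) % 100


def use_new_database(user_id, rollout_percent, flag_key="use-new-db"):
    """Return True if this user should be served by the new database."""
    return bucket_for(user_id, flag_key) < rollout_percent


# Example: decide per request which database serves the user.
if __name__ == "__main__":
    for uid in ("alice", "bob", "carol"):
        print(uid, use_new_database(uid, rollout_percent=10))
```

Because the bucket depends only on the user ID and the flag key, a user who is moved to the new database at 10% stays there as the rollout grows, and setting the percentage back to 0 acts as an immediate rollback.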

Step 5: Post-Migration Verification

Our job wasn’t done until we verified the following:

- Snapshot comparisons between old and new DBs

- Query performance benchmarks

- Fallback support in case we needed to roll back

We also left read-only access to the old system live for two weeks—just in case we needed to run forensic checks. After everything was in place, we reflected on what made this migration successful—and what we’d do differently next time.
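One way to approach the snapshot comparison from Step 5 is to checksum both sides chunk by chunk and report only the ranges that differ. The sketch below is a simplified illustration: dictionaries stand in for the two databases, IDs are assumed to be integers, and the function names are hypothetical. In a real system the per-chunk digest would more likely be computed inside each database to avoid shipping rows over the network.

```python
import hashlib


def chunk_checksum(rows, start, end):
    """Hash every (id, value) pair with start <= id < end, in ID order."""
    digest = hashlib.sha256()
    for record_id in range(start, end):
        if record_id in rows:
            digest.update(repr((record_id, rows[record_id])).encode())
    return digest.hexdigest()


def mismatched_chunks(old_rows, new_rows, chunk_size=1000):
    """Return the start ID of each chunk whose contents differ."""
    ids = set(old_rows) | set(new_rows)
    if not ids:
        return []
    first = (min(ids) // chunk_size) * chunk_size
    return [
        start
        for start in range(first, max(ids) + 1, chunk_size)
        if chunk_checksum(old_rows, start, start + chunk_size)
        != chunk_checksum(new_rows, start, start + chunk_size)
    ]
```

Narrowing a discrepancy down to a 1,000-record range keeps the follow-up investigation small, and an empty result is a cheap signal that the two snapshots agree.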

Lessons Learned

- Start with abstraction. Your migration is only as smooth as your system’s modularity.

- Test for reality. Load test every read, write, and edge case—not just happy paths.

- Keep observability high. Logs, metrics, tracing—this isn't optional when migrating live systems.

- Design for humans. Developers fear migrations because they’ve been burned before. Build tooling that makes it safe and explainable.

Final Thoughts

These takeaways proved essential, but the broader lesson was that relocating a billion-user database is not a casual weekend task; it is a major engineering milestone. Even so, it is entirely achievable with the proper tools, frameworks, and attitude, all while preserving the user experience.

My two cents, after training thousands of developers at Sumit’s platform, is that zero downtime is not a marketing term; it is a genuine commitment rooted in concern for the users, the team, and the developers themselves.
