Time for responsible Artificial Intelligence

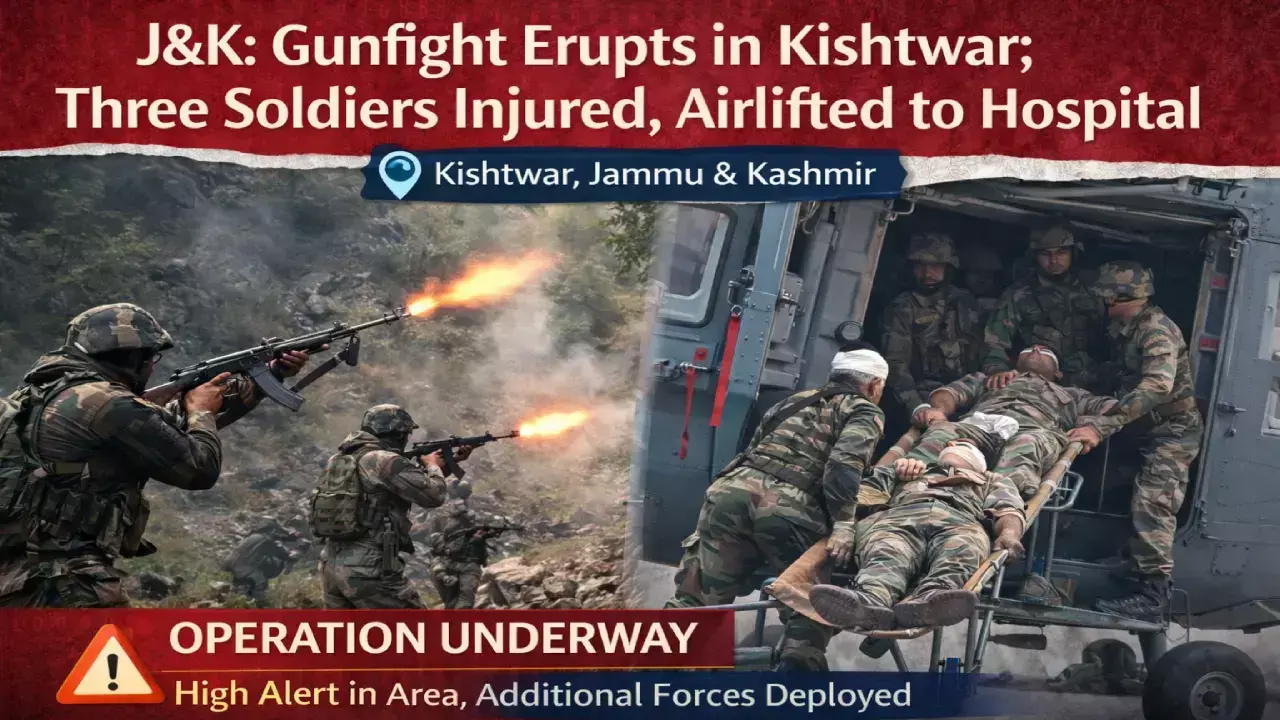

In 2018, Amazon shut down an AI-based recruiting tool after the e-commerce major found that it was biased against women.

Think back to your days in the school cricket team, when the coach or sports teacher picked the tallest kid to be captain. It felt unfair to the others, who may have had better leadership skills but were overlooked because of a silly bias.

This kind of inbuilt bias occurs across society, whether against women, darker-skinned people or non-English speakers. It feels unfair, but for the most part we have come to accept it as a way of life.

With all its bells and whistles, Artificial Intelligence (AI) promises to make the world a better place to live for generations to come. But when you use AI to support critical decisions based on sensitive data, you need to be sure that you understand what it is doing and why.

Is it making accurate, bias-neutral decisions, or is it violating anyone's privacy? You have to recognise that an AI algorithm is not a hand-coded set of if-then-else rules; it learns its behaviour from data produced by real people.

If those people all belong to the same sect, region or religion, they may share an inherent bias, which is then passed on to the AI engine for the rest of its working life.

AI is well positioned to make us rethink the world as we know it today. It is expected to add more than $15 trillion to the global economy by 2030, sparking significant change across industries. By 2022, more than 60 per cent of companies are expected to have implemented machine learning, big data analytics and related AI tools in their operations.

AI brings an enormous opportunity to push us forward as a society, but great potential comes with significant risks. Unquestioning belief in technology and AI is already producing uncomfortable outcomes, biased AI being one of them.

In 2018, Amazon shut down a recruiting tool after discovering that it was biased against women. The company clearly never intended to build that bias into the system. The tool appears to have been created using data that already carried a gender bias, and as a result the bias crept into its outputs.

Beyond bias, there is inappropriate AI, such as China's social credit system, which in my opinion goes against the fundamental rights of the human race. Big Brother is watching and rating citizens on their behaviour in the real world, such as jaywalking or smoking in non-smoking areas, to decide whether they are worthy of credit to buy a car or a home.

It is far too intrusive and violates privacy in a big way. And it is expected that by next year, all 1.4 billion people who live in China will have a social credit score.

Unknowable AI is another hard one to digest. Sometimes called "black box" AI, it offers no visibility into how the system arrived at its decisions.

As humans, we want to know why a decision was made, especially when things go wrong. Having no insight into that can be considered irresponsible, particularly in medical AI, where people's lives are at stake.

It is in our best interest that the principles of ethical and accountable AI be built into the systems implemented by industry, civil society and government.

The creators of AI systems surely bear the added responsibility of guiding the development and implementation of AI in ways that are in sync with our social values.

(The author is Chairman and CEO of Hyderabad-based Brightcom Group)